Introduction

You've asked ChatGPT a question. The answer seems reasonable, but is it the best answer? Would Claude give better advice? What about Gemini?

Manually comparing AI models is tedious—copying questions between tabs, waiting for responses, trying to remember what each one said.

AI comparison tools solve this problem by querying multiple AI models simultaneously and presenting responses side-by-side. This guide covers why you need comparison tools, what's available, and how to use them effectively.

---

Why Compare AI Responses?

Models Disagree More Than You Think

We analyzed 500 complex queries across GPT-5, Claude, Gemini, and DeepSeek:

| Agreement Level | Share of Queries |
|---|---|
| Full agreement | 48% |
| Partial agreement | 31% |
| Significant disagreement | 21% |

Different Strengths

Each AI model has different capabilities:

| Model | Primary Strength |
|---|---|
| GPT-5 | Versatile, polished responses |
| Claude | Nuanced analysis, long documents |
| Gemini | Current information, Google integration |
| DeepSeek | Technical/math, cost-effective |
| Llama | Open-source, customizable |

Quality Assurance

When multiple AI models agree on an answer:

- Higher confidence in accuracy

- Reduced hallucination risk

- More reliable for important decisions

When they disagree:

- The topic requires further investigation

- The question has genuine complexity

- Areas of uncertainty are exposed
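To make "agreement" concrete, here is a minimal Python sketch that takes one answer per model, applies a crude normalization, and reports the majority answer, the share of models that gave it, and which models dissented. The model names are placeholders, and exact matching after normalization only works for short factual answers; free-form responses would need a more forgiving comparison. This is an illustration, not a description of how any particular consensus tool works.

```python
from collections import Counter


def normalize(answer: str) -> str:
    # Crude normalization: lowercase, collapse whitespace, drop trailing "." or "!".
    return " ".join(answer.lower().split()).rstrip(".!")


def agreement(answers: dict[str, str]) -> tuple[str, float, list[str]]:
    """Return (majority answer, share of models giving it, dissenting models)."""
    normalized = {model: normalize(text) for model, text in answers.items()}
    counts = Counter(normalized.values())
    top_answer, top_count = counts.most_common(1)[0]
    dissenters = [m for m, a in normalized.items() if a != top_answer]
    return top_answer, top_count / len(answers), dissenters


# Placeholder model names and answers for illustration.
top, share, dissent = agreement({"model_a": "Paris.", "model_b": "paris", "model_c": "Lyon"})
print(top, round(share, 2), dissent)  # paris 0.67 ['model_c']
```

A low share, or any dissenters at all on an important question, is the signal to investigate further rather than trust the majority blindly.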

Types of AI Comparison Tools

Side-by-Side Comparison

How it works: Query multiple models and display the responses next to each other.

Best for: Visual comparison, quick assessment

Examples: TypingMind, ChatHub

Consensus Tools

How it works: Query multiple models, then synthesize the responses into a unified analysis showing agreement and disagreement.

Best for: Understanding what models agree on, identifying uncertainty

Examples: CouncilMind

Multi-Model Chat

How it works: Switch between models within one conversation.

Best for: Trying different models for different parts of a task

Examples: Poe, ChatPlayground

Benchmark Tools

How it works: Run standardized tests across models and measure performance.

Best for: Objective capability comparison

Examples: OpenRouter, LMSYS Chatbot Arena

---

Top AI Comparison Tools in 2025

1. CouncilMind ⭐ Best for Consensus

What it does: Queries 15+ AI models simultaneously, enables multi-round discussions between models, and synthesizes a consensus.

Strengths:

- Automated consensus analysis

- Multi-round model discussions

- Points of agreement/disagreement highlighted

- Confidence scoring

- All major frontier models included

---

2. ChatHub

What it does: Browser extension for side-by-side AI comparison.

Strengths:

- Works in the browser

- Queries multiple models simultaneously

- Simple interface

Limitations:

- Requires separate API keys

- No synthesis or consensus

- Limited model selection

---

3. TypingMind

What it does: Multi-model interface with side-by-side comparison.

Strengths:

- Clean interface

- Prompt library

- Multiple workspace support

Limitations:

- Bring your own API keys

- No automated synthesis

- Setup required

---

4. Poe (by Quora)

What it does: Access to multiple AI models through a single subscription.

Strengths:

- Many models available

- Mobile apps

- Custom bots

Limitations:

- Not truly side-by-side

- Usage limits per model

- No consensus features

---

5. LMSYS Chatbot Arena

What it does: Blind A/B testing of AI models.

Strengths:

- Unbiased comparison

- Community ratings

- Free to use

Limitations:

- Only two models at a time

- Random model selection

- Research-focused

---

How to Compare AI Models Effectively

Step 1: Define Your Use Case

Be specific about what you need:

- "I need help with Python debugging"

- "I need analysis of a 50-page document"

- "I need current market research"

Step 2: Create Test Queries

Develop 3-5 representative queries:

- Simple factual question (baseline)

- Complex analytical question

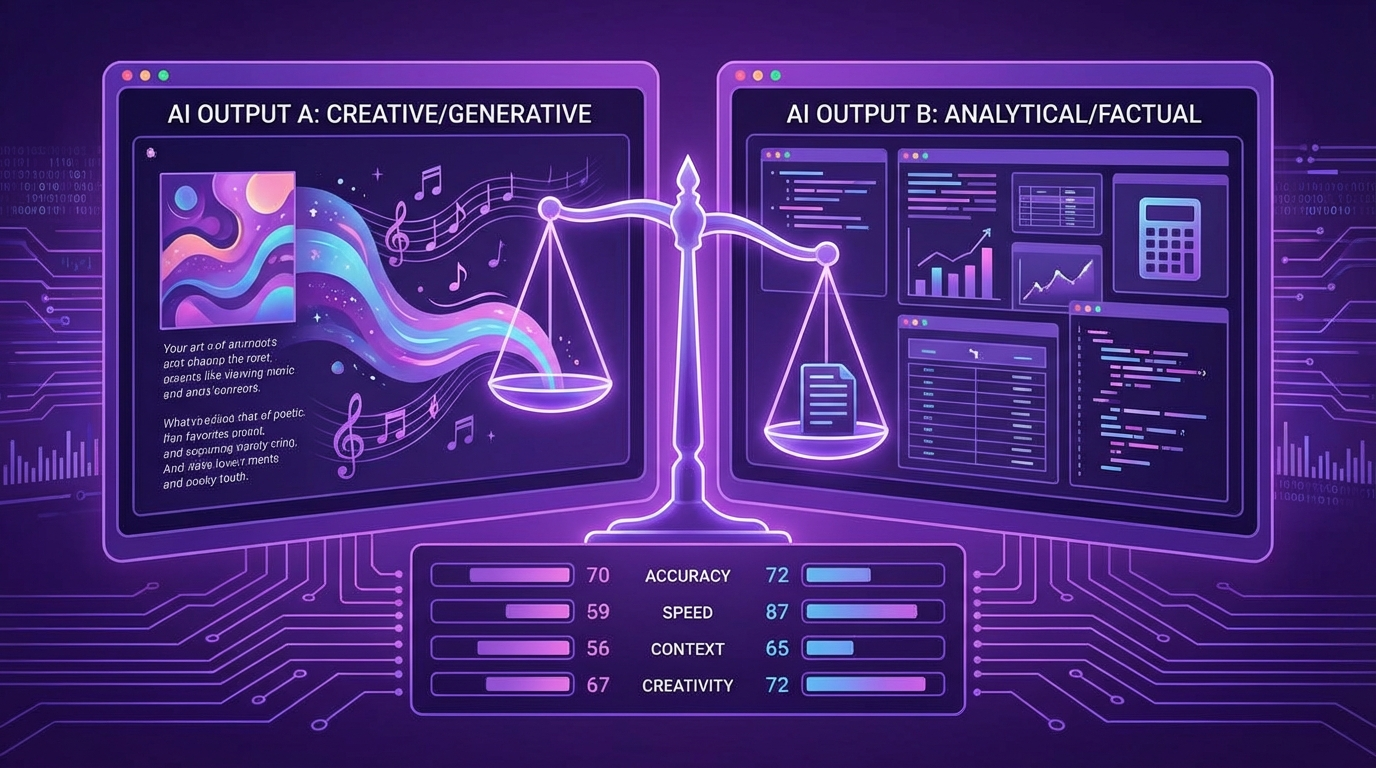

- Creative/generation task

- Technical/specialized question

- Current events question

Step 3: Run Comparison

Query all models with identical prompts. Note:

- Response quality

- Accuracy

- Nuance and caveats

- Speed

- Formatting
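If you have API access, running the comparison in parallel guarantees every model sees the identical prompt and records speed at the same time. The sketch below assumes a hypothetical `ask_model` coroutine standing in for whatever provider client you actually use; here it only simulates a call with `asyncio.sleep`, and the model names are placeholders.

```python
import asyncio
import time


async def ask_model(model: str, prompt: str) -> str:
    # Hypothetical stand-in for a real provider API call; replace with your client.
    await asyncio.sleep(0.1)
    return f"[{model}] answer to: {prompt}"


async def timed_ask(model: str, prompt: str) -> tuple[str, str, float]:
    # Measure wall-clock latency for a single model.
    start = time.perf_counter()
    reply = await ask_model(model, prompt)
    return model, reply, time.perf_counter() - start


async def compare(models: list[str], prompt: str) -> list[tuple[str, str, float]]:
    # Send the identical prompt to every model concurrently; results keep input order.
    return await asyncio.gather(*(timed_ask(m, prompt) for m in models))


if __name__ == "__main__":
    results = asyncio.run(compare(["model_a", "model_b", "model_c"], "Explain the CAP theorem"))
    for model, reply, seconds in results:
        print(f"{model:10} {seconds:.2f}s  {reply}")
```

Because `asyncio.gather` runs the calls concurrently, total wall time is roughly that of the slowest model rather than the sum of all of them.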

Step 4: Analyze Patterns

Look for:

- Which model consistently performs best for YOUR needs

- Where models agree (high confidence areas)

- Where models disagree (investigate further)

- Unique insights from each model

Step 5: Choose Your Approach

Based on findings:

- One model clearly best: Use that model

- Different models for different tasks: Route accordingly

- Need maximum reliability: Use consensus tool

Comparison Strategies by Use Case

For Important Decisions

Strategy: Use a consensus tool

Why: Multiple perspectives reduce the risk of error

Tool: CouncilMind

Example workflow:

- Enter your decision question

- Review all model responses

- Note consensus points (proceed confidently)

- Investigate disagreement points

- Make informed decision

For Finding Best Model

Strategy: A/B testing across tasks

Why: Find the optimal model for your work

Tools: ChatHub, TypingMind

Example workflow:

- Define 5-10 representative tasks

- Test each across models

- Score quality per model per task

- Identify patterns

- Choose primary model (with backup for weak areas)
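The scoring steps above reduce to simple arithmetic. This sketch assumes you have recorded a 1-5 quality score for each model on each task (the scores and model names below are made up for illustration). It picks the model with the highest average as your primary and lists the tasks where a different model scored best as backup routes.

```python
# Hypothetical 1-5 quality scores from your own tests: scores[task][model]
scores = {
    "debugging":   {"model_a": 5, "model_b": 4, "model_c": 3},
    "summarizing": {"model_a": 3, "model_b": 5, "model_c": 4},
    "math":        {"model_a": 4, "model_b": 3, "model_c": 5},
    "drafting":    {"model_a": 5, "model_b": 4, "model_c": 3},
}


def choose_models(scores: dict[str, dict[str, int]]):
    """Return (primary model, average score per model, {task: backup model})."""
    models = list(next(iter(scores.values())))
    averages = {
        m: sum(by_model[m] for by_model in scores.values()) / len(scores)
        for m in models
    }
    primary = max(averages, key=averages.get)
    # A task gets a backup route when its top scorer is not the primary model.
    backups = {}
    for task, by_model in scores.items():
        best = max(by_model, key=by_model.get)
        if best != primary:
            backups[task] = best
    return primary, averages, backups


primary, averages, backups = choose_models(scores)
print(primary)   # model_a (average 4.25)
print(backups)   # {'summarizing': 'model_b', 'math': 'model_c'}
```

Averages hide weak spots, which is why the backups dictionary matters: a model that wins overall can still lose clearly on one task type.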

For Research

Strategy: Multi-model query plus synthesis

Why: Comprehensive coverage, fewer blind spots

Tools: CouncilMind, manual comparison

Example workflow:

- Send the research question to multiple models

- Compare factual claims

- Note where sources agree

- Investigate disagreements

- Synthesize final understanding

What to Look for When Comparing

Response Quality

- Accuracy of information

- Depth of analysis

- Relevance to question

- Actionable insights

Nuance and Caveats

- Does the model acknowledge uncertainty?

- Does it present multiple perspectives?

- Does it note limitations?

Formatting

- Well-structured responses

- Appropriate use of headers, lists

- Easy to read and use

Speed

- Time to first response

- Total generation time

- Streaming smoothness
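Time to first response and total generation time can be measured separately when a model streams its output. In this sketch, `fake_stream` is a hypothetical stand-in for a provider's streaming response; `measure_stream` works with any iterable of text chunks.

```python
import time
from collections.abc import Iterable, Iterator


def fake_stream(chunks: list[str], delay: float = 0.05) -> Iterator[str]:
    """Hypothetical stand-in for a provider's streaming response."""
    for chunk in chunks:
        time.sleep(delay)  # simulate per-chunk generation latency
        yield chunk


def measure_stream(stream: Iterable[str]) -> dict:
    """Record time to first chunk, total time, and the assembled text."""
    start = time.perf_counter()
    time_to_first = None
    parts = []
    for chunk in stream:
        if time_to_first is None:
            time_to_first = time.perf_counter() - start
        parts.append(chunk)
    return {
        "time_to_first_s": time_to_first,  # None if the stream was empty
        "total_s": time.perf_counter() - start,
        "text": "".join(parts),
    }


stats = measure_stream(fake_stream(["Hello", ", ", "world"]))
print(stats["text"], round(stats["time_to_first_s"], 2), round(stats["total_s"], 2))
```

A model with a short time to first chunk but a long total time feels responsive in chat yet may be slow for batch work, so it is worth recording both numbers.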

Consistency

- Does quality vary across queries?

- Are there surprising failures?

- Reliable for production use?

Building Your Comparison Workflow

Casual Users

- Use Poe's free tier to access multiple models

- Ask important questions to 2-3 models

- Note obvious differences

- Pick the model that fits best

Regular Users

- Use CouncilMind for important decisions

- Default to preferred model for routine tasks

- Compare when unsure

- Update preferences as models evolve

Power Users

- API access to multiple models

- Automated routing based on task type

- Systematic quality monitoring

- Regular re-evaluation of model choices
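Automated routing can start as a lookup table built from your own comparison results. The keyword classifier below is a deliberately naive assumption for illustration (a production router might use a dedicated classifier model instead), and the task names and model names are placeholders.

```python
from typing import Optional

# Placeholder routing table: fill it in from your own comparison results.
ROUTES = {
    "code": "model_a",
    "long_document": "model_b",
    "current_events": "model_c",
}
DEFAULT_MODEL = "model_a"

# Naive keyword heuristic (an assumption for illustration only).
KEYWORDS = {
    "code": ("traceback", "python", "bug", "function"),
    "long_document": ("summarize", "pdf", "report"),
    "current_events": ("today", "latest", "news"),
}


def classify(prompt: str) -> Optional[str]:
    """Return the first task type whose keywords appear in the prompt, else None."""
    text = prompt.lower()
    for task, words in KEYWORDS.items():
        if any(word in text for word in words):
            return task
    return None


def route(prompt: str) -> str:
    """Pick a model for the prompt, falling back to the default model."""
    return ROUTES.get(classify(prompt), DEFAULT_MODEL)


print(route("Summarize this 50-page report"))            # model_b
print(route("What's the latest news on chip exports?"))  # model_c
print(route("Write a haiku about autumn"))               # model_a (no keyword matched)
```

Keeping the routes in a plain table makes the regular re-evaluation step cheap: when a new comparison changes your preferences, you edit one entry rather than the routing logic.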

Enterprise Users

- Centralized multi-model platform

- Compliance and security controls

- Usage analytics and cost optimization

- Custom fine-tuned model integration

Cost Considerations

Per-Model Subscriptions

| Model | Monthly Cost |
|---|---|
| ChatGPT Plus | $20 |
| Claude Pro | $20 |
| Gemini Advanced | $20 |
| Total for all three | $60 |

Comparison Tools

| Tool | Price | Models Included |
|---|---|---|
| CouncilMind Pro | $29/month | 15+ models |
| Poe Premium | $17/month | Multiple models |
| TypingMind | $79 one-time | Any model your own API keys can access (BYOK) |

Verdict

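Comparison tools typically cost less than three separate subscriptions ($60/month), and some, such as CouncilMind, add synthesis and consensus features that individual subscriptions lack. Keep in mind that bring-your-own-key (BYOK) tools such as TypingMind also incur API usage charges from each provider on top of the license fee.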
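Here is the first-year arithmetic behind this verdict, using the list prices from the tables above. With BYOK you also pay each provider for API usage; the $10/month figure below is a placeholder assumption, not a measured number, so substitute your own spend.

```python
def first_year_cost(monthly_fee: float = 0.0, one_time_fee: float = 0.0,
                    monthly_api_spend: float = 0.0) -> float:
    """One-time fee plus twelve months of subscription and API spend."""
    return one_time_fee + 12 * (monthly_fee + monthly_api_spend)


options = {
    "ChatGPT Plus + Claude Pro + Gemini Advanced": first_year_cost(monthly_fee=3 * 20),
    "CouncilMind Pro": first_year_cost(monthly_fee=29),
    "Poe Premium": first_year_cost(monthly_fee=17),
    # ASSUMPTION: $10/month of API usage on your own keys; use your real spend.
    "TypingMind (BYOK)": first_year_cost(one_time_fee=79, monthly_api_spend=10),
}

# Cheapest option first.
for name, cost in sorted(options.items(), key=lambda item: item[1]):
    print(f"{name:45} ${cost:,.0f}")
```

With that placeholder, the first-year ranking is TypingMind ($199), Poe Premium ($204), CouncilMind Pro ($348), and three separate subscriptions ($720). A modestly higher API budget changes the ranking, so the BYOK figure is the one to check against your own usage.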

---

Conclusion

AI comparison tools transform how you use AI:

- See how different models answer the same question

- Find the best model for your needs

- Get more reliable answers through consensus

- Save time vs. manual comparison

---

Frequently Asked Questions

What is the best AI comparison tool?

CouncilMind is the most comprehensive AI comparison tool, querying 15+ models simultaneously with automated consensus synthesis. For manual comparison, ChatHub offers free browser-based comparison.

How do I compare ChatGPT and Claude?

Ask both the same question and compare responses. For systematic comparison, use a dedicated comparison tool that shows responses side-by-side. Look for accuracy, nuance, and how they handle uncertainty.

Is comparing AI models worth the effort?

For important decisions, absolutely. In our analysis of 500 complex queries, the models fully agreed only 48% of the time, and 21% of queries produced significant disagreement. Comparison helps you judge which answer to trust and surfaces the true complexity of your question.
